This is a story all about

How my life got twist-turned upside down

And I'd like to take a minute and sit right there

And tell you how I became the failure king of MSU!

Introduction

Quick introduction: I’m Greg, and I’m a seasoned network engineer. I was not one when this story took place. This is also my first blog post ever. I welcome you to connect with me on Twitter and LinkedIn.

Background

In August 2004 I was hired into the Network Services team at Mississippi State University (MSU). Within a month of my hiring we received a large shipment of Cisco Catalyst 2950s. We had 48-port and 24-port switches and Gigastack modules out the you know what. I eventually came to despise those little things, but that’s a different story. By the spring of 2005 we had removed the old ATM OC-12 gear and replaced it with a wonderful 1 Gbps Ethernet network, and things were humming right along without much issue. In 2006 we received notice of a vulnerability in the firmware we were running on the Catalyst 2950s. The boss said, “Greg, it’s time we upgraded our switches. Get on that!” And this is where our story begins.

Setup and programming

Working at a university has some interesting challenges, and budget is the biggest of them. Because our budget was almost non-existent, we had to do things ourselves. Before I came on board, the previous folks had written a whole host of Perl scripts to automate all kinds of things. Upgrading 800+ switches was NOT one of them, and I was not about to log into 800 switches and do it manually. Go go gadget automation. Yes… I was doing automation before it was cool. It was, however, done in Perl. With my C/C++ background, getting the syntax down was not much of a challenge. So I grabbed a spare 48-port switch off the shelf and configured it like a production switch. Then I started on my Perl script, using Expect to work out everything that needed to be done. Here is the list of steps I thought I needed to perform:

- Connect to switch and log into it

- Determine space left on flash

- If not enough space left for new image, delete old image

- Upload new image

- Change boot configuration

- Reload

If you’re a quick study, you may have already spotted my flaw. If not… keep reading.

Testing

Using the configured test switch, I performed upgrade after upgrade after upgrade without issue. I tested everything I could think of, minus one thing that will become clear later. I had a coworker test it, and it worked for him too. To keep things moving while switches rebooted, we needed to upgrade the farthest-out switches first and work our way back in. A coworker had a script that built this ordering for us, so while the farthest nodes were rebooting we could already be working on the closer ones. To make sure we never rebooted an upstream switch while a downstream switch was still upgrading, we split each node level into its own file. Running the script against an individual file would upgrade the switches in that file. Easy enough. We’ve tested. We’ve prepared the tree-level files. Let’s do this!
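That ordering is essentially a walk of the network tree. The original script isn't shown, so here is a minimal Python sketch (not the Perl we actually used) under stated assumptions: the topology is a tree with no loops, known as a map from each switch to the switch it uplinks to, with the core mapping to `None`. It groups switches by hop count from the core and returns the deepest level first, which is the order the level files were run in. The function name and switch names are hypothetical.

```python
from __future__ import annotations

from collections import defaultdict


def upgrade_levels(uplink: dict[str, str | None]) -> list[list[str]]:
    """Group switches by hop count from the core, deepest level first.

    `uplink` maps each switch to the switch it connects up to; the core
    maps to None. Assumes the topology is a tree (no loops).
    """
    depth_of: dict[str, int] = {}

    def depth(switch: str) -> int:
        # Walk toward the core, caching each switch's depth on the way back.
        if switch not in depth_of:
            parent = uplink[switch]
            depth_of[switch] = 0 if parent is None else depth(parent) + 1
        return depth_of[switch]

    levels: dict[int, list[str]] = defaultdict(list)
    for switch in uplink:
        levels[depth(switch)].append(switch)
    # Farthest-out level first, core last; each inner list is one "level file".
    return [sorted(levels[d]) for d in sorted(levels, reverse=True)]


# Hypothetical campus: two buildings off the core, one floor switch below bldg-a.
topology = {"core": None, "bldg-a": "core", "bldg-a-fl2": "bldg-a", "bldg-b": "core"}
for i, level in enumerate(upgrade_levels(topology), start=1):
    print(f"level file {i}: {level}")
# level file 1: ['bldg-a-fl2']
# level file 2: ['bldg-a', 'bldg-b']
# level file 3: ['core']
```

Each inner list can be written to its own file, and a level is only started once every switch in the level below it has begun its reboot.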

Implementation

A coworker and I met up at 22:00 one Tuesday evening to begin the network migration. Our outage window ran from then until 06:00. Let’s get started! Away we went. The first run went without a hitch. We started slow, with a node-level file containing a single switch, and it upgraded just fine. Then we started branching out, and more and more switches began to upgrade. As I mentioned earlier, we would begin working on the next level up as the last switch in the level below began its reboot. Our monitoring system saw the switches go away and some of them come back. The ones not coming back were random and all over the place. At first I thought our monitoring system was just missing them because their uplink switches were rebooting. After a while I began to get nervous that something might be wrong. We had made it through over 50% of the switches, trucking right along. I told my coworker I was heading to the building next door, since it had a switch that had not come back yet. I took a laptop and headed that way while he continued working through the remaining switches.

Disaster strikes

I plug the laptop into the console port and what do my eyes see? ROMMON!! That’s right, folks, I’m sitting at the ROMMON prompt. If you’re not familiar with it, ROMMON is the boot loader for the Cisco firmware. It’s similar to the BIOS on a computer and loads the firmware image that is configured. I’ve seen this before. No big deal; a configuration issue probably kept it from booting. Let’s check the config register. 0x2102 is staring back at me. For those unaware, this is the standard boot config register value, and it means the following:

- Ignores break

- Boots into ROM if initial boot fails

- 9600 baud console speed (the default for most platforms)

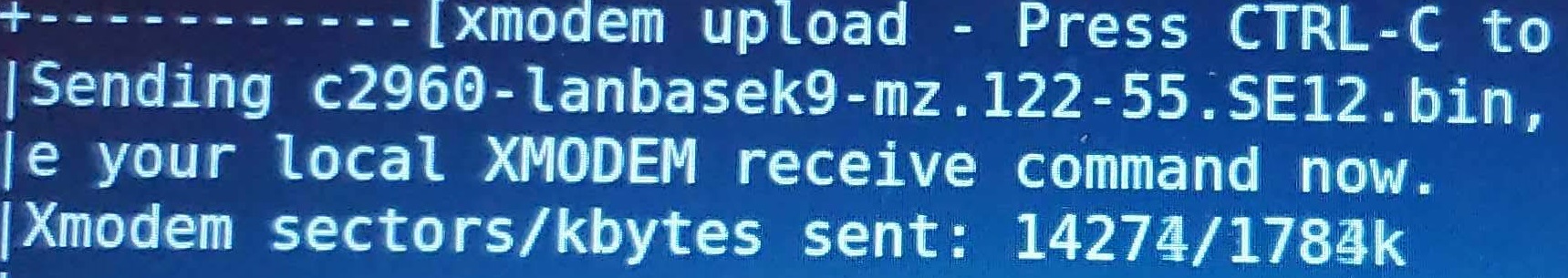

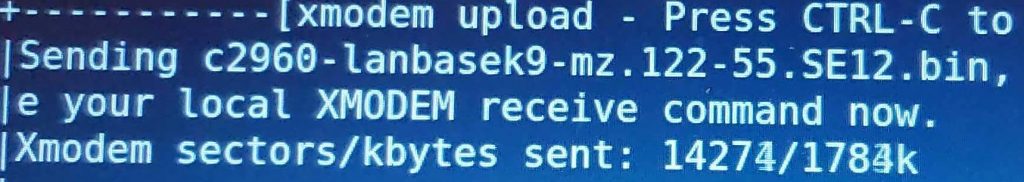
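To make the 0x2102 bullets concrete, here is a small Python sketch that decodes just those bits. It assumes the bit layout Cisco documents for its router configuration registers (boot field in bits 0-3, break-disable in bit 8, console speed in bits 11-12, ROM fallback in bit 13); other platforms, including some switches, may differ, so treat it as illustrative. The function name and output keys are hypothetical.

```python
from __future__ import annotations


def decode_confreg(value: int) -> dict[str, object]:
    """Decode the config-register bits discussed above.

    Assumes Cisco's documented router layout; other platforms may differ.
    """
    boot_field = value & 0x000F  # bits 0-3
    if boot_field == 0x0:
        boot = "stay in ROM monitor"
    elif boot_field == 0x1:
        boot = "platform-dependent (first image / boot helper)"
    else:
        boot = "use boot system commands from the config"
    return {
        "boot": boot,
        # Bit 8 (0x0100): the break key is ignored.
        "break_ignored": bool(value & 0x0100),
        # Bit 13 (0x2000): boot default ROM software if network boot fails.
        "rom_fallback": bool(value & 0x2000),
        # Bits 11-12 (0x1800) both clear: default 9600 baud console.
        # Some platforms also use bit 5 for console speed; not handled here.
        "console_9600": (value & 0x1800) == 0,
    }


# For 0x2102 the boot field is 0x2, and break_ignored, rom_fallback,
# and console_9600 all come back True.
print(decode_confreg(0x2102))
```

Nothing in that register says "there is no image to boot", which is why the register looked perfectly normal.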

Ok. That’s right. That’s what’s supposed to be there. Odd that it didn’t boot. Ok, I’ll just issue `boot bootflash:/<image name>`. Since I didn’t know the image name off the top of my head, I issued the `dir` command. What came back made my heart sink to the pit of my stomach and made me almost vomit. It was gut-wrenching, one of the worst moments of my entire life. The image was NOT on the switch. I immediately called my coworker and had him stop any further upgrades; we let the ones already running finish. I came back to the office to regroup. I knew I had only one choice: Xmodem/Zmodem an image onto the switch over the console cable. I downloaded the image to my laptop and went next door. It didn’t work. I started freaking out. Then I realized the image was bigger than the flash. I was like, what? How is that possible? I got this image directly from Cisco. I quickly found a smaller image. It wasn’t the most recent one, but it worked. It was taking too long, though; there was no way I could hit all of the switches that were down by myself. While I was uploading, my coworker assessed the damage. I don’t remember the exact count now, but it was not a small number. By then it was roughly 02:00. I bit the bullet and called my boss. Frank was not happy, but he understood. He mashed the all-hands button, so I started calling my coworkers to come in and assist. While I waited for them to arrive, I started uploading the image to other switches.

Response

This is where we stood when all hands arrived on deck: 2 master keys, 5 laptops, and 8 workers. I had already figured out that changing the console baud rate to 19200 sped up the transfer; at that rate it took about 15 minutes to upload the correct image. So we started working through the down switches. Frank did what a great manager and leader would do: he put himself between me and upper management and maintained some form of order during the outage. I worked until about 15:30 that day. When I walked back into the office, I was a zombie. Frank looked at me and said I should go home, that they could finish the handful that were left. I probably asked him 10 times if he was certain he didn’t need me to go fix more of the switches. He assured me that they could handle it and that I should go home. I crashed hard when I made it back to the apartment. The whole time I was terrified that I had earned my last paycheck at MSU.
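Why does the baud rate matter so much? A rough estimate, assuming 8N1 framing (one start bit, eight data bits, one stop bit, so 10 bits on the wire per byte), is size × 10 / baud. This sketch ignores Xmodem/Zmodem packet overhead and acknowledgement delays, so real transfers run somewhat slower, and the 2 MB image size below is hypothetical, not the actual 2950 image. The takeaway is simply that doubling the rate from 9600 to 19200 roughly halves the transfer time.

```python
def serial_transfer_seconds(size_bytes: int, baud: int, bits_per_byte: int = 10) -> float:
    """Lower-bound time to send size_bytes over an async serial console.

    Assumes 8N1 framing (10 bits per byte on the wire) and ignores
    Xmodem/Zmodem packet overhead and ACK delays.
    """
    if baud <= 0:
        raise ValueError("baud must be positive")
    return size_bytes * bits_per_byte / baud


# Hypothetical 2 MB image at the default console speed vs. 19200 baud.
for rate in (9600, 19200):
    minutes = serial_transfer_seconds(2_000_000, rate) / 60
    print(f"{rate} baud: about {minutes:.0f} minutes")
# 9600 baud: about 35 minutes
# 19200 baud: about 17 minutes
```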

Aftermath and Lessons Learned

The following day I made it into work bright and early and finished what we called a “Netlog.” It’s a form of RCA (root cause analysis), though not fully what you would consider a true RCA; it was just a log of what happened. I figured I could get that done before I was let go for incompetence. When Frank came in, he walked into his office without saying a word to me. I was terrified. I began gathering up the few belongings I had in my office and copying some things I needed off my PC. Then I heard it: “GREG! Get in here!” Oh no… here we go. My heart was racing. I just knew this was the end. I walked in and shut the door. Frank just looked at me and said, “So… what did you learn last night?” I blinked. I wasn’t certain what to say. I told him I had learned how NOT to automate a switch upgrade of nearly 800 switches. He laughed and said, “Yeah, you got that right.” He then asked if I knew exactly what had happened. I explained how my script worked and how I had tested it, and told him I knew exactly how to fix the bug so it would never happen again. He said, “Good, now go back to your office and don’t touch ANYTHING today.”

I learned that, no matter what, you triple-check the size of the flash before doing anything to it. If I had grabbed a 24-port switch instead of a 48-port switch, I would have known that from the beginning. For whatever reason, the 24-port Catalyst 2950s had a smaller flash than the 48-port switches. I did not know that at the time. I also didn’t realize that Cisco would provide a 2950 image that would not actually fit on every Catalyst 2950. I eventually fixed the script and ran the upgrade successfully. After proper testing, of course!
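The fix boils down to a check that runs before anything is deleted. Here is a minimal Python sketch of that decision logic, not the actual Perl/Expect script. It assumes the free space and current image size have already been parsed from the switch's `dir` output, and that the old image is the only thing worth deleting. `FlashState` and `plan_upgrade` are hypothetical names, and the byte counts in the example are made up. It refuses to touch the flash when the new image can't fit even after the old one is gone, and it puts a verification step between the upload and the reload.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class FlashState:
    """What the script learned from the switch (hypothetical structure)."""
    free_bytes: int           # free space reported by `dir`
    current_image_bytes: int  # size of the image the switch booted from


def plan_upgrade(flash: FlashState, new_image_bytes: int) -> list[str]:
    """Return ordered steps for one switch, or raise before touching flash."""
    # The missing check: the new image must fit even after the old one is deleted.
    usable = flash.free_bytes + flash.current_image_bytes
    if new_image_bytes > usable:
        raise ValueError(
            f"new image is {new_image_bytes} bytes but only {usable} are usable; "
            "skipping this switch and leaving its current image alone"
        )
    steps: list[str] = []
    if new_image_bytes > flash.free_bytes:
        # Tolerable only because the switch keeps running from memory until it reloads.
        steps.append("delete old image")
    steps += [
        "upload new image",
        "verify uploaded image (size, plus checksum if available)",
        "change boot configuration",
        "reload only if verification passed",
    ]
    return steps


# Hypothetical sizes: a switch with too little flash is now skipped instead of bricked.
try:
    plan_upgrade(FlashState(free_bytes=500_000, current_image_bytes=3_000_000), 4_000_000)
except ValueError as err:
    print("skipped:", err)
```

The reload is the point of no return, so whatever drives the switch should only send it after the verify step passes; a missing or short file means stop and re-upload, not reboot.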

TL;DR

Always check the size of the flash on your network devices before you delete the current firmware image. The new firmware may not actually fit on the device. I found that out the hard way and had to manually upload the firmware image using Zmodem over a console cable. Thankfully, I did not get fired.